If you are an Amazon Web Services customer, you are probably familiar by now with the massive reboots sweeping across their infrastructure. If your account manager did not proactively notify you, your first warning sign might have been the arrival of a new API call, DescribeInstanceStatus, which reports whether a host or instance requires a reboot and whether a host is scheduled for retirement.

Based on the advice of my devops manager, we added a feature to our cloud asset management tool to assess the impact on our infrastructure. The result: 350+ nodes in four AZs had to be rebooted within a 10-day period. The average reboot window was 5 hours, spanning global business hours, and the advance notice ranged by zone from 3 to 10 days.
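For readers who want to run a similar assessment, here is a minimal sketch, not the tool described above. It assumes the present-day boto3 SDK and the response shape of its `describe_instance_status` call (`InstanceStatuses`, `AvailabilityZone`, `Events`, `Code`, `Description`). The function names, the set of codes treated as disruptive, and the `[Completed]` description prefix check are illustrative assumptions.

```python
from collections import Counter

# EC2 event codes treated here as disruptive (an assumption; adjust to taste).
DISRUPTIVE_CODES = {"instance-reboot", "system-reboot", "instance-retirement"}


def summarize_scheduled_events(response):
    """Count instances with a pending disruptive event, grouped by AZ.

    `response` is a dict shaped like a DescribeInstanceStatus result.
    """
    per_zone = Counter()
    for status in response.get("InstanceStatuses", []):
        pending = [
            event for event in status.get("Events", [])
            if event.get("Code") in DISRUPTIVE_CODES
            # Assumption: finished events carry a "[Completed]" prefix.
            and not event.get("Description", "").startswith("[Completed]")
        ]
        if pending:
            per_zone[status["AvailabilityZone"]] += 1
    return dict(per_zone)


def fetch_instance_status(region_name):
    """Collect all pages of DescribeInstanceStatus for one region.

    Hypothetical helper; requires boto3 and AWS credentials.
    """
    import boto3  # imported lazily so the summary logic works without it

    client = boto3.client("ec2", region_name=region_name)
    paginator = client.get_paginator("describe_instance_status")
    statuses = []
    for page in paginator.paginate(IncludeAllInstances=True):
        statuses.extend(page["InstanceStatuses"])
    return {"InstanceStatuses": statuses}
```

Calling `summarize_scheduled_events(fetch_instance_status("us-east-1"))` for each region in use would give a per-AZ count of nodes still awaiting a reboot or retirement.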

The good news is we architected for this type of failure; the bad news is managing the logistics during the holiday season has been less than ideal. To add to the challenge, Amazon seemed to be rushing the reboots.

Over the last week we made the following observations:

- Notification - I read a blog post from Loggly describing how their AWS reboot email notifications were caught in a spam folder. In our case, we simply did not receive notification for all of our reboots. Fortunately the API call proved to be reliable, although it required daily review because scheduled reboots periodically changed.

- Timing of notification - We received at least partial email notice 7-10 days in advance in our US availability zones, 3 days in Europe, and none in Asia. I would have hoped for at least a week across all AZs.

- Reboot window - On one occasion the reboot window Amazon provided was apparently not honored. An engineer went to proactively initiate a reboot at the defined time, only to find the instance had already been rebooted before the committed window.

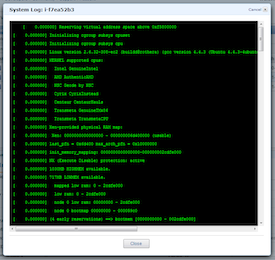

- Time to reboot - While most of our nodes came back in 5-10 minutes, some took 30-60 minutes - and in a few cases, the nodes never returned at all.

- EBS - Block storage performance on AWS can be highly variable in the best of times, but it seems the reboots have pushed it to new lows. It’s possible some reboots are resulting in instance relocation, causing a degradation in the overall performance of EBS.
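The daily review mentioned under Notification could be automated along these lines. This is a hedged sketch, not what we actually ran: it compares two saved DescribeInstanceStatus-shaped responses and reports events that appeared, disappeared, or had their window moved. The function names and the snapshot-per-day approach are assumptions for illustration.

```python
def index_events(response):
    """Map (instance id, event code) to its (NotBefore, NotAfter) window."""
    windows = {}
    for status in response.get("InstanceStatuses", []):
        for event in status.get("Events", []):
            key = (status["InstanceId"], event["Code"])
            windows[key] = (event.get("NotBefore"), event.get("NotAfter"))
    return windows


def diff_schedules(yesterday, today):
    """Report scheduled events that were added, removed, or rescheduled."""
    old, new = index_events(yesterday), index_events(today)
    return {
        "added": sorted(k for k in new if k not in old),
        "removed": sorted(k for k in old if k not in new),
        "moved": sorted(k for k in new if k in old and new[k] != old[k]),
    }
```

Anything in `moved` is a reboot whose window changed since the last check, which is exactly the case that email notices were least reliable at communicating.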

Rumors abound across the blogosphere about what is driving these reboots. While I can't provide a definitive cause, it's clear this initiative was time sensitive, resulting in execution that fell below the generally high standards I expect from Amazon.

As of today, we have 45 nodes left to reboot, so the end is finally in sight. While the experience has done nothing to shake my belief in public clouds, it does make me realize how much we now take for granted from our cloud fabric. We, like Amazon, will be glad to have this behind us - and I suspect we will both be more prepared for the next time a cloud doesn’t feel like a cloud.